Welcome back to Agentic Coding Weekly. This week Paul Willot shares his Claude Code setup and planning-execution-expansion workflow loop.

Here are the updates on agentic coding tools, models, and workflows worth your attention for the week of Jan 11-17, 2026.

1. Tooling and Model Updates

Claude Code

Claude Code 2.1.7 added MCP Tool Search to dynamically load tools into context.

When MCP tool descriptions exceed 10% of the context window, they are automatically deferred and discovered via the MCPSearch tool instead of being loaded upfront.

Reduces context usage for users with many MCP tools configured.
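The deferral rule above can be sketched as a simple threshold check. This is only an illustration of the 10% rule as described here, not Claude Code's actual implementation; the function name, parameters, and token counts are hypothetical.

```python
def should_defer_mcp_tools(
    tool_description_tokens: int,
    context_window_tokens: int,
    threshold_percent: int = 10,  # the 10% figure quoted above
) -> bool:
    """Return True when tool descriptions exceed the given share of the context window.

    Hypothetical helper: integer arithmetic avoids float rounding at the boundary.
    """
    if context_window_tokens <= 0:
        raise ValueError("context_window_tokens must be positive")
    return tool_description_tokens * 100 > context_window_tokens * threshold_percent


# Assumed numbers for illustration: a 200,000-token window.
print(should_defer_mcp_tools(25_000, 200_000))  # above 10%, so tools would be deferred
print(should_defer_mcp_tools(5_000, 200_000))   # below 10%, so tools load upfront
```

Under this reading, exactly 10% does not trigger deferral, since the rule says "exceed".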

The ultrathink keyword doesn't do anything in Claude Code anymore. Extended thinking is enabled by default, and the thinking budget can be controlled by setting the MAX_THINKING_TOKENS env var.

From the docs: Phrases like “think”, “think hard”, “ultrathink”, and “think more” are interpreted as regular prompt instructions and don’t allocate thinking tokens.

Claude Code now allows pressing tab to add more instructions when accepting/rejecting a permission prompt.

After accepting a plan, Claude Code now automatically clears the context, and the plan gets a fresh context window.

OpenCode

OpenCode versions older than v1.1.10 are affected by a CVE that allows any website visited in a web browser to execute arbitrary commands on the local machine. Make sure you are using v1.1.10 or newer.

GitHub now officially supports using a Copilot Pro, Pro+, Business, or Enterprise subscription with OpenCode.

2. Workflow of the Week

This week's workflow is from Paul Willot, Senior Machine Learning Engineer at Liquid AI. Over to Paul:

I'm very much a terminal guy, so I focus on using CLI tools in a way that maintains control over the output.

My Tool Stack

I primarily use Claude Code in the terminal for actual coding, complemented by ChatGPT in the browser for research and planning. Occasionally I'll use Gemini for drafting UI parts since I've found it much better at spatial understanding.

For quick read-only exploration of a new codebase, I like using Gemini 3 Flash, which has an impressive price/speed/quality balance. I used to call it through aider but switched to charmbracelet/crush in the last few months.

Claude Code Setup

I keep Claude Code largely vanilla, using a few MCP servers sporadically. I'm not currently using Agent Skills. I tried them when they were first introduced but found it hard to robustly control model behavior. Instead, I prepare a Makefile with self-documented targets, which are runnable by both agents and humans. It allows me to precisely control how the model runs and tests the codebase. I also pre-approve most tools for Claude and restrict access to specific files using standard file system permissions.
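To illustrate what "self-documented targets" can look like, here is a minimal sketch that assumes the common `target: ## description` comment convention. Paul doesn't say which convention he uses, so the convention, the example Makefile, and the `pytest`/`ruff` commands inside it are all assumptions.

```python
import re

# Matches lines such as "test: deps ## Run the unit tests" (assumed convention).
TARGET_DOC = re.compile(r"^([A-Za-z0-9_.-]+):[^#\n]*##\s*(.+)$")


def documented_targets(makefile_text: str) -> dict[str, str]:
    """Map each documented target name to its one-line description."""
    targets = {}
    for line in makefile_text.splitlines():
        match = TARGET_DOC.match(line)
        if match:
            targets[match.group(1)] = match.group(2).strip()
    return targets


# Hypothetical Makefile contents; "build" has no "##" comment, so it is skipped.
EXAMPLE_MAKEFILE = """\
.PHONY: test lint
test: ## Run the unit tests
\tpytest -q
lint: ## Check formatting and style
\truff check .
build:
\tpython -m build
"""

for name, description in documented_targets(EXAMPLE_MAKEFILE).items():
    print(f"{name:10s} {description}")
```

A listing like this gives an agent and a human the same short menu of approved entry points, which is the kind of control the Makefile approach is meant to provide.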

I mostly draft the CLAUDE.md for projects manually, but when I let it be auto-generated, I review and trim it down because LLMs like to be too exhaustive and include irrelevant details, which hurts focus down the line.

New Project Workflow

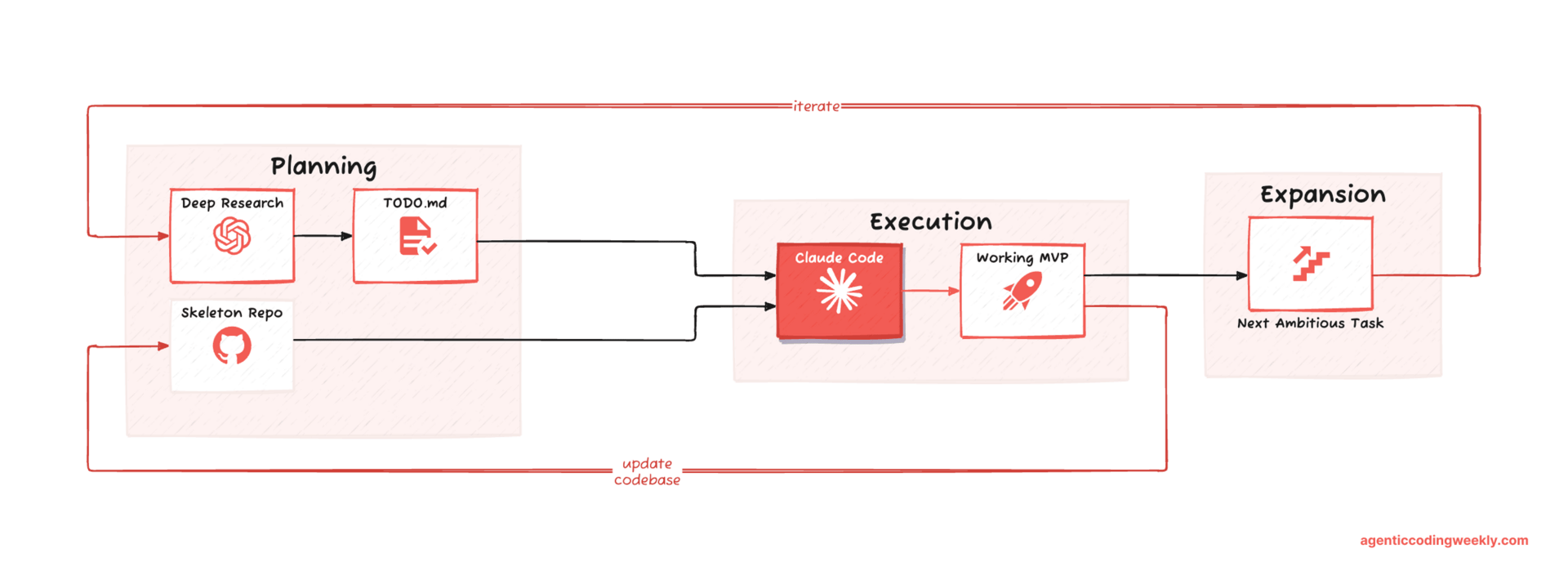

Planning: I start a ChatGPT deep research session to gather information and tools around the problem I'm tackling. Simultaneously, I also set up a skeleton repo with basic structure and environment setup. This GitHub repo shows my typical project structure for a Python project.

Execution: I use the deep research results to manually fill an initial TODO.md, then ask Claude to tackle the steps in order. I aim for the smallest possible MVP and review code and results frequently. I constrain the model fairly heavily in how to go about implementation at this stage.

Expansion: Once happy with the initial shape, I add tests and set up tooling for the agent, like playwright-mcp for projects with web UI parts. Then I add more ambitious tasks to the TODO.md, reset context, and let the agent run longer with less hand-holding.

When Things Go Wrong

When the model is stuck or things don't go as I like, instead of adding more instructions I often reset context and have the model work on a sub-problem first, then integrate that solution into the broader project. If that's still not working... I just do it myself!

— Paul

3. Community Picks

Scaling Long-Running Autonomous Coding

Cursor built an experimental browser engine from scratch using GPT-5.2 to understand the feasibility of running coding agents autonomously for weeks. The key innovation was moving from a flat organization of agents with self-coordination through a shared file to a structured, hierarchical pipeline with specialized roles: Planners, Workers, and a Judge.

There was some initial skepticism about the implementation, but the codebase now compiles and can render webpages. Read the post and the HN discussion.

Don't Fall Into the Anti-AI Hype

antirez, creator of Redis, on the current reality of writing code with AI. In a few hours, he modified his linenoise library to support UTF-8, fixed transient failures in Redis tests, wrote a C library for inference of BERT-like models, and more. He discusses how he feels about his code being used for LLM training, impact on jobs, and why skipping AI is not the solution as an engineer.

Key quote: "It is simply impossible not to see the reality of what is happening. Writing code is no longer needed for the most part. It is now a lot more interesting to understand what to do, and how to do it (and, about this second part, LLMs are great partners, too). It does not matter if AI companies will not be able to get their money back and the stock market will crash. All that is irrelevant, in the long run. It does not matter if this or the other CEO of some unicorn is telling you something that is off putting, or absurd. Programming changed forever, anyway."

Read the post and the HN discussion.

A Better Way to Limit Claude Code Access to Secrets

Shows how to use Bubblewrap, a lightweight Linux sandboxing tool, to secure AI coding agents like Claude Code, preventing them from accessing sensitive files like .env or executing dangerous commands. Read the post and the HN discussion.
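As a rough idea of the approach, the sketch below builds a `bwrap` command line that exposes the filesystem read-only, keeps the project directory writable, and masks selected files by binding `/dev/null` over them. It is not necessarily the configuration from the linked post; the helper name, paths, and the choice of masking technique are assumptions, and the function only constructs the argument list without running anything.

```python
from pathlib import PurePosixPath


def bwrap_command(
    project_dir: str, agent_command: list[str], hidden_files: list[str]
) -> list[str]:
    """Build a bwrap argv (hypothetical helper; does not execute anything)."""
    project = PurePosixPath(project_dir)
    argv = [
        "bwrap",
        "--ro-bind", "/", "/",                  # whole filesystem visible, read-only
        "--dev", "/dev",                        # minimal /dev inside the sandbox
        "--proc", "/proc",
        "--tmpfs", "/tmp",                      # private scratch space
        "--bind", str(project), str(project),   # project stays writable
    ]
    for name in hidden_files:
        # Assumed masking technique: the file reads as empty inside the sandbox.
        argv += ["--ro-bind", "/dev/null", str(project / name)]
    argv += ["--die-with-parent", "--chdir", str(project)]
    return argv + agent_command


# Example with hypothetical paths; pass the result to subprocess.run on Linux.
print(" ".join(bwrap_command("/work/app", ["claude"], [".env"])))
```

A real setup would likely need more writable paths (for example the agent's own config directory) and a decision about network access, which this sketch leaves at bwrap's defaults.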

Ask HN: How Do You Safely Give LLMs SSH/DB Access?

OP is using Claude Code for DevOps tasks, including SSH and database interactions, but finds the need to manually review every command tedious. Wants to give agents more autonomy over SSH by whitelisting safe commands (e.g., ls, grep, SELECT) while strictly prohibiting dangerous ones (e.g., rm, DROP).

Suggestions from the community include using a local dev database, applying the principle of least privilege to credentials given to LLMs, using tool calling with guardrails baked into the tool, and "just don't do it"!
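The "guardrails baked into the tool" idea can be sketched as an allowlist check that a tool runs before executing anything. This is a minimal illustration, not a vetted security control: the function name and the set of rejected characters are assumptions, and it only inspects the first word of a command, so it complements least-privilege credentials rather than replacing them.

```python
import shlex

# Read-only commands taken from the examples in the thread summary above.
ALLOWED_COMMANDS = {"ls", "grep"}

# Reject anything that could chain, redirect, or substitute commands (assumed list).
SHELL_METACHARACTERS = set(";|&><`$(){}\n")


def is_command_allowed(command_line: str) -> bool:
    """Return True only for a single allowlisted command with no shell tricks."""
    if any(ch in SHELL_METACHARACTERS for ch in command_line):
        return False
    try:
        tokens = shlex.split(command_line)
    except ValueError:  # e.g. an unbalanced quote
        return False
    return bool(tokens) and tokens[0] in ALLOWED_COMMANDS


for candidate in ["ls -la /var/log", "grep 'error' app.log", "rm -rf /", "ls; rm -rf /"]:
    verdict = "allow" if is_command_allowed(candidate) else "deny"
    print(f"{verdict:5s} {candidate}")
```

Denying by default and allowing a short list is the safer direction: a blocklist of dangerous commands such as rm or DROP is easy to bypass with an equivalent command that was not anticipated.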

Read the HN discussion.

A Deep Dive on Agent Sandboxes

Deep dive into the sandboxing architecture of the Codex CLI, which uses OS-native mechanisms (macOS Seatbelt and Linux Landlock/seccomp). Read the post and the HN discussion.

That’s it for this week. I write this weekly on Mondays.