Welcome back to Agentic Coding Weekly. This week's workflow is from Christoph Nakazawa on running multiple Codex sessions with a fire-and-forget approach.

Here are the updates on agentic coding tools, models, and workflows worth your attention for the week of Jan 4 - 10, 2026.

Tooling and Model Updates

Anthropic started blocking third‑party coding harness tools (OpenCode, pi, etc.) from using Claude models via Claude Pro/Max subscriptions. These subscriptions now only grant access to Claude Code. Third-party tools can still use the API directly, but you'll need to pay separately for API access rather than using your subscription.

OpenAI is taking the opposite approach. They're working with third-party tools including OpenCode to let Codex subscription holders use their credits and usage limits directly in those tools.

Claude Code 2.1.0 added skill hot-reload. Skills you create or modify in `~/.claude/skills` or `.claude/skills` are now immediately available without restarting your session. This release also added a `language` setting to force the model to respond in a specific language (e.g., Japanese, Spanish).

Workflow of the Week

This week's workflow is from Christoph Nakazawa, CEO at Nakazawa Tech.

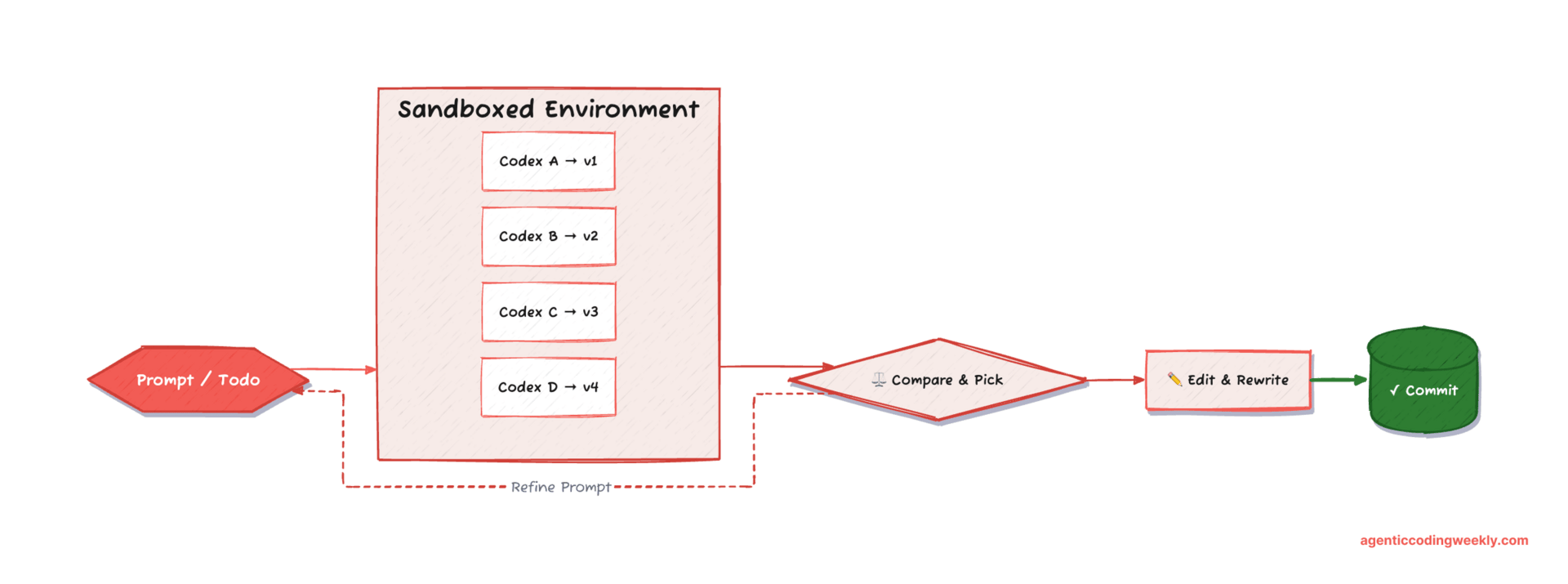

The Fire-and-Forget Approach to Agentic Coding

Christoph primarily uses ChatGPT or Codex on the web. He has tried Claude Code and some open models but never vibed with any of them. He prefers to stay in control and isn't a fan of terminal agents touching files on his computer. He wants agents to work in their own environment and send him the results for review.

As he puts it: "The killer feature of Codex on web is the ability to specify how many versions of a solution you want. When I was building fate, I asked for 4 versions for anything slightly complex. As a result, I barely trust single-response LLMs anymore."

The approach is fire and forget. He doesn't always know what a solution should look like, and he's not a fan of specs. Code wins arguments. He fires off a prompt, looks at the results, and if it's not right or doesn't work, starts a fresh chat with a refined prompt. Planning happens in a regular ChatGPT chat with a non-Codex Thinking or Pro model.

He typically fires off 3-5 Codex sessions before stepping away from the computer or going to sleep, then works through each solution one by one. When done with a task, he archives all the chats and moves on.
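To make the shape of this workflow concrete, here is a minimal Python sketch of the fan-out step: several independent attempts per task, launched in parallel, then collected so they can be reviewed one task at a time. This is only an illustration, not how Codex works internally; Codex web handles this through its UI. `run_agent` is a hypothetical placeholder, not a Codex or OpenAI API.

```python
import concurrent.futures
from dataclasses import dataclass


@dataclass
class Attempt:
    task: str
    version: int
    result: str


def run_agent(prompt: str, version: int) -> str:
    # Hypothetical placeholder for one remote agent session. A real setup
    # would submit the prompt to a hosted agent and return its proposed diff;
    # this stub just echoes so the sketch runs offline.
    return f"[v{version}] proposed change for: {prompt}"


def fire_and_forget(tasks: list[str], versions: int = 4) -> dict[str, list[Attempt]]:
    # Launch `versions` independent attempts per task, all at once.
    results: dict[str, list[Attempt]] = {task: [] for task in tasks}
    with concurrent.futures.ThreadPoolExecutor() as pool:
        futures = {
            pool.submit(run_agent, task, v): (task, v)
            for task in tasks
            for v in range(1, versions + 1)
        }
        # Collect attempts as they finish, in whatever order that happens.
        for future in concurrent.futures.as_completed(futures):
            task, v = futures[future]
            results[task].append(Attempt(task, v, future.result()))
    # Review later, task by task, in a stable v1, v2, ... order.
    for attempts in results.values():
        attempts.sort(key=lambda a: a.version)
    return results


if __name__ == "__main__":
    queue = ["Add retry logic to the fetch helper", "Fix the flaky date test"]
    for task, attempts in fire_and_forget(queue, versions=4).items():
        print(task)
        for attempt in attempts:
            print("  ", attempt.result)
```

The design choice mirrors the workflow: nothing waits on any single attempt, and the review step only starts once every version is back.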

A few things he finds useful:

Turning memory off and starting a fresh chat for every question.

Keeping a strict project structure so the model has rails to follow, then heavily editing and rewriting anything it generates before it lands in the repo.

Getting good at prompting one specific model, since the same prompt doesn't work as well with other LLMs.

Building intuition for what the model is good at, and adjusting when a task takes more (or less) time than expected.

Treating the prompt input bar as a replacement for the todo list. Instead of filing a task, he drops the thought into a prompt, and the change ships in an hour instead of sitting in a backlog forever.


Community Picks

Opus 4.5 is going to change everything

The author finds that Opus 4.5 delivers on the promise of AI for coding in a way previous models haven't, and lists several projects built with it. The workflow uses GitHub Copilot in VS Code with a custom agent prompt along with the Context7 MCP server, and the post includes that custom agent prompt. Read the post and the HN discussion.

How to Code Claude Code in 200 Lines of Code

The post shows how to implement a basic coding agent in 200 lines of Python: an LLM running in a loop with three tools for reading a file, listing files, and editing a file. Read the post and the HN discussion.
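The core loop can be sketched in Python in a few dozen lines. This is not the post's code: the model is replaced by a hypothetical scripted stub (`make_scripted_llm`) so the example runs offline, and the reply format (`{"tool": ..., "args": ...}` or `{"final": ...}`) is an assumption standing in for a real provider's tool-calling API.

```python
import json
import tempfile
from pathlib import Path
from typing import Callable


def read_file(args: dict) -> str:
    return Path(args["path"]).read_text()


def list_files(args: dict) -> str:
    return "\n".join(sorted(p.name for p in Path(args["path"]).iterdir()))


def edit_file(args: dict) -> str:
    # Replace the first occurrence of `old` with `new`; an empty `old`
    # means "create or overwrite the file with `new`".
    path = Path(args["path"])
    if args["old"] == "":
        path.write_text(args["new"])
        return f"created {path.name}"
    text = path.read_text()
    if args["old"] not in text:
        return f"error: text not found in {path.name}"
    path.write_text(text.replace(args["old"], args["new"], 1))
    return f"edited {path.name}"


TOOLS: dict[str, Callable[[dict], str]] = {
    "read_file": read_file,
    "list_files": list_files,
    "edit_file": edit_file,
}


def run_agent(llm: Callable[[list], dict], task: str, max_steps: int = 10) -> str:
    # The whole agent: ask the model for the next step, execute the tool it
    # picked, append the result to the transcript, repeat until it answers.
    messages = [{"role": "user", "content": task}]
    for _ in range(max_steps):
        reply = llm(messages)  # assumed shape: {"tool", "args"} or {"final"}
        messages.append({"role": "assistant", "content": json.dumps(reply)})
        if "final" in reply:
            return reply["final"]
        tool = TOOLS.get(reply["tool"])
        observation = tool(reply["args"]) if tool else f"error: unknown tool {reply['tool']}"
        messages.append({"role": "tool", "content": observation})
    return "stopped: step limit reached"


def make_scripted_llm(replies: list[dict]) -> Callable[[list], dict]:
    # Hypothetical stand-in for a real model: replays fixed replies in order.
    remaining = iter(replies)
    return lambda messages: next(remaining)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as workdir:
        target = str(Path(workdir) / "hello.py")
        llm = make_scripted_llm([
            {"tool": "edit_file", "args": {"path": target, "old": "", "new": "print('hi')\n"}},
            {"tool": "list_files", "args": {"path": workdir}},
            {"tool": "edit_file", "args": {"path": target, "old": "hi", "new": "hello"}},
            {"final": "Created hello.py"},
        ])
        print(run_agent(llm, "Create hello.py that prints a greeting"))
        print(Path(target).read_text(), end="")
```

With a real model, `llm` would call a provider API with tool definitions and translate its tool calls into this shape; the loop itself would not need to change.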

How we made v0 an effective coding agent

Vercel's v0 team significantly improved the reliability of their agentic coding pipeline by implementing three key components:

Dynamic system prompt to inject up-to-date knowledge and hand-curated directories with code samples designed for LLM consumption.

Streaming text manipulation that fixes common errors, such as imports of non-existent icons, without additional model calls.

Post-streaming autofixers, including deterministic fixes and targeted fixes using a small, fast, fine-tuned model.
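The streaming-fix idea can be sketched in Python as a generator that sits between the model and the client. This is not Vercel's implementation: the icon package (`lucide-react`), the `KNOWN_ICONS` allow-list, the fallback icon, and nearest-name matching via `difflib` are all assumptions chosen for illustration. The key property is that the fixer only holds back the current partial line, so output keeps streaming while bad imports get rewritten.

```python
import difflib
import re
from typing import Iterable, Iterator

# Assumed allow-list; a real pipeline would read the icon package's exports.
KNOWN_ICONS = sorted({"ArrowRight", "Check", "Search", "User", "X"})
FALLBACK_ICON = "Check"
IMPORT_RE = re.compile(r'^import \{([^}]*)\} from "lucide-react";?$')


def fix_specifier(spec: str) -> str:
    # Keep a valid import as-is; otherwise import the closest known icon
    # under the original local name so later JSX still resolves.
    imported, _, alias = spec.partition(" as ")
    imported, alias = imported.strip(), alias.strip()
    if imported in KNOWN_ICONS:
        return spec.strip()
    close = difflib.get_close_matches(imported, KNOWN_ICONS, n=1, cutoff=0.6)
    replacement = close[0] if close else FALLBACK_ICON
    return f"{replacement} as {alias or imported}"


def fix_line(line: str) -> str:
    # Only single-line named imports from the icon package are rewritten.
    match = IMPORT_RE.match(line.strip())
    if not match:
        return line
    specs = [s for s in match.group(1).split(",") if s.strip()]
    fixed = ", ".join(fix_specifier(s) for s in specs)
    return f'import {{ {fixed} }} from "lucide-react";'


def fix_stream(chunks: Iterable[str]) -> Iterator[str]:
    # Re-chunk model output into whole lines, fix each, and emit it at once;
    # only the current partial line is ever held back.
    buffer = ""
    for chunk in chunks:
        buffer += chunk
        while "\n" in buffer:
            line, buffer = buffer.split("\n", 1)
            yield fix_line(line) + "\n"
    if buffer:
        yield fix_line(buffer)


if __name__ == "__main__":
    model_output = [
        'import { Serch, Ch',
        'eck } from "lucide-react";\n',
        "export default () => <Serch />;\n",
    ]
    for piece in fix_stream(model_output):
        print(piece, end="")
```

Aliasing the replacement to the original name (`Search as Serch`) means any JSX later in the file that uses the misspelled name still resolves, so the fixer never has to look ahead in the stream.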

That’s it for this week. I’ll be back next Monday with the latest agentic coding updates.