Welcome back to Agentic Coding Weekly. Here are the updates on agentic coding tools, models, and workflows worth your attention for the week of Dec 7 - 13, 2025.

Tooling and Model Updates

GPT-5.2

OpenAI shipped GPT-5.2, the latest in the GPT-5 series. GPT-5.2 Thinking hits 80.0% on SWE-bench Verified, up from 76.3% scored by GPT-5.1 Thinking. For comparison, Gemini 3 Pro sits at 76.2%, GPT-5.1 Codex Max at 77.9%, and Opus 4.5 leads at 80.9%.

Pricing is $1.75 / $14 per million input / output tokens, 40% higher than GPT-5.1's $1.25 / $10. Input is still cheaper than Gemini 3 Pro ($2 / $12) and Sonnet 4.5 ($3 / $15); output is cheaper than Sonnet 4.5 but above Gemini 3 Pro. Knowledge cutoff is Aug 2025.
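To see what the new pricing means in practice, here is a quick back-of-the-envelope calculation. The per-million-token prices are the list prices quoted above. The workload of 2M input and 200K output tokens is a hypothetical, input-heavy agentic session, not a measured figure.

```python
# Back-of-the-envelope API cost comparison using the list prices quoted above.
# The token counts used below are a hypothetical workload, not measured data.

PRICES = {  # model -> (USD per 1M input tokens, USD per 1M output tokens)
    "GPT-5.2": (1.75, 14.00),
    "GPT-5.1": (1.25, 10.00),
    "Gemini 3 Pro": (2.00, 12.00),
    "Sonnet 4.5": (3.00, 15.00),
}


def session_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of one session at list prices."""
    in_price, out_price = PRICES[model]
    return (input_tokens / 1_000_000) * in_price + (output_tokens / 1_000_000) * out_price


def pct_increase(old: float, new: float) -> float:
    """Percentage change from old to new."""
    return (new - old) / old * 100


if __name__ == "__main__":
    # Input and output prices both rise by the same 40%.
    print(f"input:  +{pct_increase(1.25, 1.75):.0f}%")
    print(f"output: +{pct_increase(10.00, 14.00):.0f}%")

    # Hypothetical input-heavy session: 2M input tokens, 200K output tokens.
    for model in PRICES:
        print(f"{model:>12}: ${session_cost(model, 2_000_000, 200_000):.2f}")
```

At this input-heavy mix GPT-5.2 comes out slightly cheaper than Gemini 3 Pro ($6.30 vs $6.40). An output-heavy session tips the other way because of GPT-5.2's higher output price.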

The dedicated GPT-5.2-Codex model isn't out yet, but the base 5.2 model is available now for use in the Codex CLI.

Read the GPT-5.2 announcement and system card.

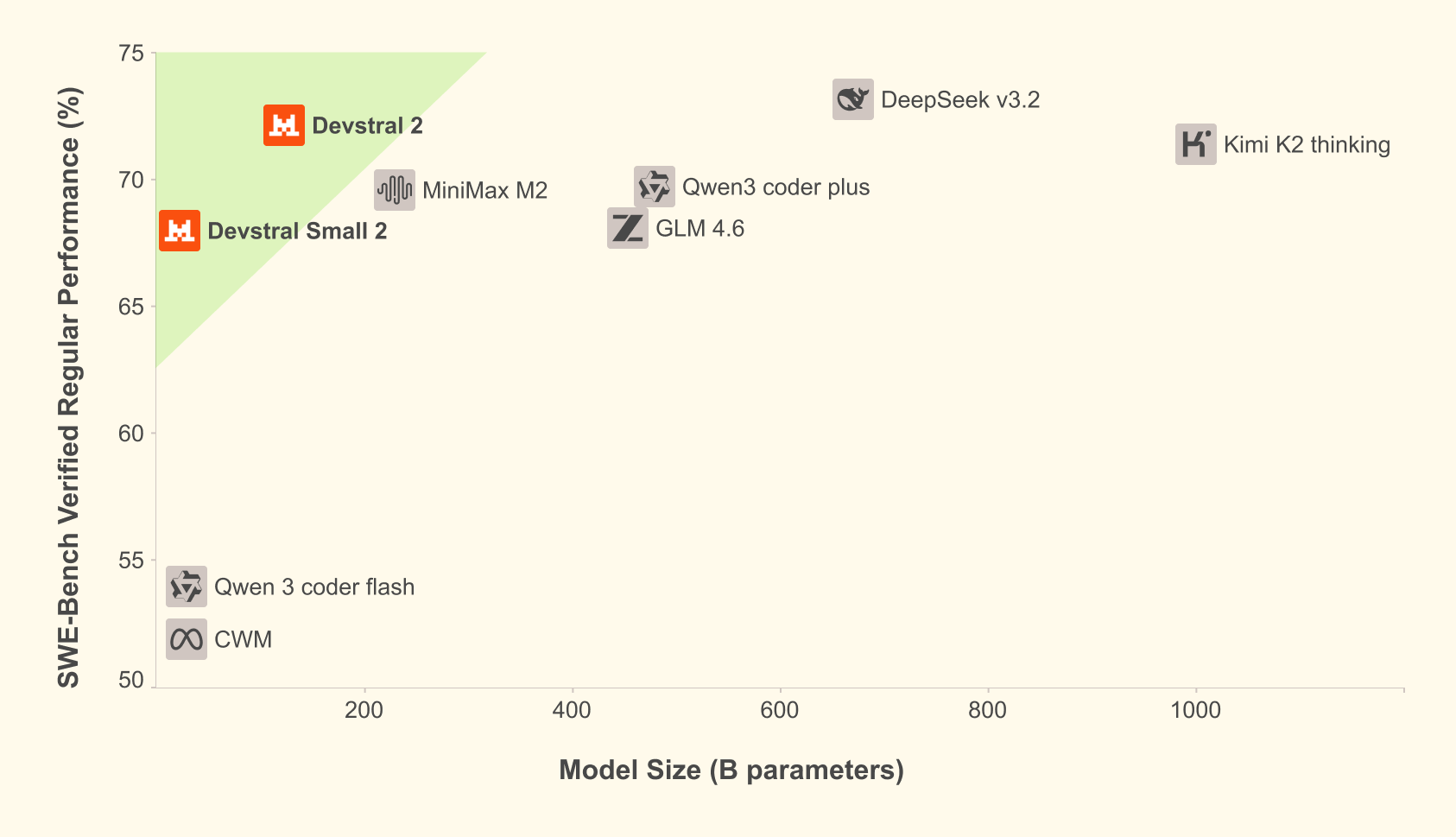

Devstral 2 and Mistral Vibe CLI

Mistral released Devstral 2, their new coding model family in two sizes: Devstral 2 (123B) and Devstral Small 2 (24B). They also released Mistral Vibe, a native CLI agent similar to Claude Code but built specifically for these models.

Devstral 2 scores 72.2% on SWE-bench Verified, very close to the top open-weight model, DeepSeek V3.2, at 73.1%. Notably, Devstral 2 is more than 5x smaller than DeepSeek V3.2 (123B vs 685B parameters).

The more interesting release for local development is Devstral Small 2. It scores 68.0% on SWE-bench Verified despite having only 24B parameters. At 8-bit quantization it would need about 25-30GB of memory, making it a solid candidate for running on-device on M-series Macs.
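The 25-30GB figure follows from simple arithmetic: parameter count times bits per weight, divided by 8 bits per byte. The sketch below computes the weights-only part. KV cache, activations, and runtime overhead come on top, and that overhead is assumed here rather than modeled.

```python
# Rough weight-memory estimate: parameters x bits per weight / 8 bits per byte.
# This counts model weights only; KV cache, activations, and runtime overhead
# are extra, which is why a 24B model at 8-bit lands in the 25-30GB range.


def weight_memory_gb(params_billions: float, bits_per_weight: int) -> float:
    """Approximate memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    bytes_total = params_billions * 1e9 * bits_per_weight / 8
    return bytes_total / 1e9


if __name__ == "__main__":
    for name, params in [("Devstral Small 2", 24), ("Devstral 2", 123)]:
        for bits in (16, 8, 4):
            print(f"{name:<17} {bits:>2}-bit: ~{weight_memory_gb(params, bits):.0f} GB")
```

By the same estimate, the 123B Devstral 2 needs about 123GB for its weights at 8-bit, which is why the 24B model is the practical choice for on-device use.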

Both models have a 256K context window. API pricing is $0.40 / $2.00 per million input / output tokens for Devstral 2 and $0.10 / $0.30 for Devstral Small 2. For comparison, DeepSeek V3.2 is priced at $0.28 / $0.42.
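Because input and output prices differ so much, a blended per-million rate is an easier way to compare these three models. The sketch below weights each price by the share of tokens that are input. The 3:1 input-to-output ratio is an assumption chosen for illustration, since agentic sessions tend to be input-heavy.

```python
# Blended price per 1M tokens for a given input:output token mix.
# Prices are the per-million list prices quoted above; the 3:1 mix is an
# illustrative assumption, not a measured workload.

PRICES = {  # model -> (USD per 1M input tokens, USD per 1M output tokens)
    "Devstral 2": (0.40, 2.00),
    "Devstral Small 2": (0.10, 0.30),
    "DeepSeek V3.2": (0.28, 0.42),
}


def blended_price(input_price: float, output_price: float, input_share: float) -> float:
    """Weighted average price per 1M tokens; input_share is the fraction of tokens that are input."""
    return input_share * input_price + (1 - input_share) * output_price


if __name__ == "__main__":
    share = 0.75  # hypothetical: 3 input tokens for every output token
    for model, (inp, out) in PRICES.items():
        print(f"{model:<17} ${blended_price(inp, out, share):.3f} per 1M tokens")
```

Under that assumed mix, Devstral 2 works out to roughly 2.5x DeepSeek V3.2's blended rate ($0.800 vs $0.315), mostly because of its higher output price, while Devstral Small 2 ($0.150) is cheaper than both.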

Check the announcement and the Vibe CLI GitHub repository.

Quick Updates

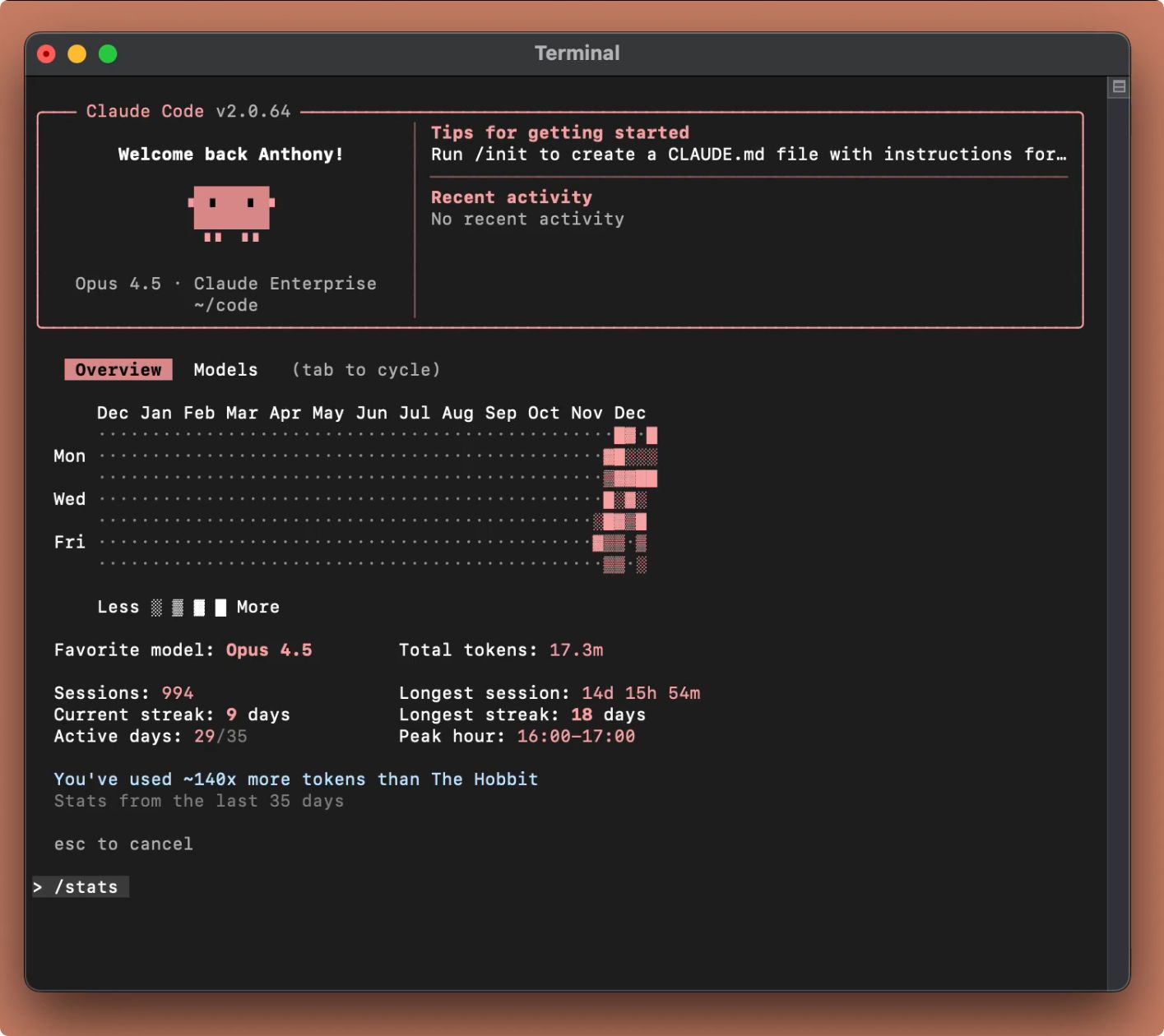

Claude Code /stats: The /stats command now shows usage stats such as your favorite model, a usage graph, and your usage streak.

Claude Code in Slack: You can now tag @Claude in Slack to spin up a session. It pulls context from the thread, identifies the relevant repo, and posts a PR link back to the channel when finished. Check the announcement and developer docs.


Community Picks

Ask HN: How can I get better at using AI for programming?

OP is rewriting an old jQuery + Django project into SvelteKit and asks for tips on improving efficiency and code quality with AI. HN folks respond with workflows and advice, including input from a Claude Code team member. The comments are worth reading.

I found three recommendations worth repeating here, all of which I practice myself:

If there is anything Claude tends to repeatedly get wrong, not understand, or spend lots of tokens on, put it in your CLAUDE.md.

Use Plan mode to go back and forth until the architecture is solid before letting the model generate any code. If the model fails to one-shot the task, do not argue with it; refine your markdown plan and run it through a fresh session to avoid context poisoning.

Use voice transcription to dictate long-form prompts, as the lower friction encourages you to provide the necessary context that typing often discourages.

Read the HN comments.

From 2 weeks to 2 hours — cutting integration test time using Claude Code Subagents

Airwallex Engineering describes a multi-agent setup on top of Claude Code to generate and maintain integration tests. It cut their test writing time from 2 weeks to 2 hours and produced 4,000+ tests covering 100% of their APIs.

They built specialized subagents, each handling either a specific test type (happy path, error cases, state transitions, etc.) or a maintenance task (review, debugging, gap analysis). Claude Code acts as the general agent that coordinates them.
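The coordinator/specialist pattern described above can be sketched in a few lines. This is a toy illustration, not Airwallex's implementation: the subagent names, task kinds, and the `POST /payments` target are all hypothetical, and `run` only records work where a real subagent would prompt a model.

```python
# Toy coordinator that routes test-generation and maintenance tasks to
# specialized subagents. All names are hypothetical; this shows the pattern,
# not the setup described in the Airwallex post.
from dataclasses import dataclass, field


@dataclass
class Subagent:
    name: str
    handles: set[str]  # task kinds this specialist accepts
    log: list[str] = field(default_factory=list)

    def run(self, kind: str, target: str) -> str:
        # A real subagent would prompt a model here; we just record the work.
        result = f"{self.name}: {kind} for {target}"
        self.log.append(result)
        return result


class Coordinator:
    """General agent: picks the specialist for each task and collects results."""

    def __init__(self, agents: list[Subagent]) -> None:
        # Map each task kind to the subagent that handles it.
        self.routes = {kind: agent for agent in agents for kind in agent.handles}

    def dispatch(self, tasks: list[tuple[str, str]]) -> list[str]:
        results = []
        for kind, target in tasks:
            agent = self.routes.get(kind)
            if agent is None:
                raise ValueError(f"no subagent handles task kind {kind!r}")
            results.append(agent.run(kind, target))
        return results


if __name__ == "__main__":
    coordinator = Coordinator([
        Subagent("happy-path-writer", {"happy_path"}),
        Subagent("error-case-writer", {"error_cases"}),
        Subagent("state-transition-writer", {"state_transitions"}),
        Subagent("maintainer", {"review", "debug", "gap_analysis"}),
    ])
    for line in coordinator.dispatch([
        ("happy_path", "POST /payments"),
        ("error_cases", "POST /payments"),
        ("gap_analysis", "payments API"),
    ]):
        print(line)
```

Keeping each specialist narrow means its instructions stay short and focused, while the coordinator only needs to know which kinds of work exist.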

The key differentiator was analyzing business requirements automatically, directly from the code, instead of relying on documentation like PRDs or manually supplied context, which quickly goes stale. Read more.

AI Can Write Your Code. It Can't Do Your Job.

Argues that AI can replace most of programming, but programming isn't the job. The engineering job, which encompasses requirements definition, architectural judgment, technical debt management, and critical trade-offs, remains human-centric.

The advice for engineers is to leverage AI for productivity while honing non-coding skills like stakeholder communication and system-level judgment. Stay curious, not defensive. Read more.

Claude Code Tips

40+ Claude Code tips from basics to advanced.

My favorite: Claude Code's WebFetch tool can't access certain sites like Reddit. Work around this by creating a skill that tells Claude to use Gemini CLI as a fallback. Gemini has web access and can fetch content that Claude can't reach directly. Read more.
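A skill is mostly instructions, but it can also point Claude at a small helper script. Below is a minimal sketch of such a helper, assuming Gemini CLI is installed as `gemini` and that its `-p` flag runs a single prompt non-interactively. The function names and prompt wording are hypothetical; the tip itself only describes the skill, not a script.

```python
# Hypothetical helper a Claude Code skill could tell Claude to run when
# WebFetch is blocked. Assumes Gemini CLI is on PATH as `gemini` and that
# `-p` runs one prompt non-interactively and prints the answer to stdout.
import shutil
import subprocess
import sys


def build_gemini_command(url: str) -> list[str]:
    """Build the argv for a one-shot Gemini CLI call that fetches a URL."""
    prompt = f"Fetch {url} and return the page's main text content."
    return ["gemini", "-p", prompt]


def fetch_via_gemini(url: str, timeout: float = 120.0) -> str:
    """Run Gemini CLI and return its stdout; raise if the CLI is missing or fails."""
    if shutil.which("gemini") is None:
        raise RuntimeError("Gemini CLI not found on PATH")
    result = subprocess.run(
        build_gemini_command(url),
        capture_output=True,
        text=True,
        timeout=timeout,
        check=True,  # raise CalledProcessError on a non-zero exit code
    )
    return result.stdout


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python fetch_fallback.py https://www.reddit.com/r/ClaudeAI/
    print(fetch_via_gemini(sys.argv[1]))
```

The skill's instructions would then tell Claude to run this script only after WebFetch fails, so the extra tool call is paid for only when needed.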

If You're Going to Vibe Code, Why Not Do It in C?

Argues that "vibe coding" (AI-driven development) is inevitable and that we should stop optimizing for human readability. The safety and ergonomics that modern languages enforce primarily serve human memory and reasoning.

Since LLMs don't struggle with memory management or undefined behavior the way humans do, it suggests the future might belong to high-performance C or x86 assembly generated by agents rather than human-centric abstractions. Read more.

Skills vs Dynamic MCP Loadouts

About moving away from dynamic Model Context Protocol (MCP) loading in favor of Skills. The key insight is that skills preserve the prompt cache and reasoning traces because they don't change the system message, whereas dynamic MCP loading still burns tokens and invalidates the cache.
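Why changing the system message hurts comes down to how prompt caching works: a cached prefix is reused only up to the first point where a new request differs from the previous one. The sketch below is a simplified model of that idea, assuming message-level granularity (real providers cache at the token or block level) and made-up message contents.

```python
# Simplified model of prompt caching: the cache is reusable only up to the
# first position where the new request differs from the previous one.
# Messages stand in for tokens here; real caches work at a finer granularity.


def reusable_prefix(previous: list[str], current: list[str]) -> int:
    """Number of leading items shared by two requests (the cacheable part)."""
    n = 0
    for a, b in zip(previous, current):
        if a != b:
            break
        n += 1
    return n


if __name__ == "__main__":
    turn1 = ["system prompt", "tools: [git, shell]", "user: fix the failing test", "assistant: ..."]

    # Dynamic MCP loadout: a new tool definition is spliced into the tool block
    # near the start, so everything after it must be reprocessed.
    mcp_turn2 = ["system prompt", "tools: [git, shell, browser]", "user: fix the failing test",
                 "assistant: ...", "user: now check the docs"]

    # Skill: the agent reads the skill doc as ordinary conversation content
    # appended at the end, so the earlier prefix is untouched.
    skill_turn2 = turn1 + ["tool result: <contents of the skill doc>", "user: now check the docs"]

    print("MCP reload reuses", reusable_prefix(turn1, mcp_turn2), "of", len(mcp_turn2))
    print("Skill reuses", reusable_prefix(turn1, skill_turn2), "of", len(skill_turn2))
```

In this toy run the MCP reload keeps only the first message cached, while the skill keeps all four earlier messages, which is the effect the article describes.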

The author's current approach: maintain skill docs manually and have Claude write and maintain its own Python/bash tools as needed. Both work better than spending tokens on MCP tool definitions upfront. Read more.

That’s it for this week. I’ll be back next Monday with the latest agentic coding updates.