Welcome back to Agentic Coding Weekly. Here are the updates on agentic coding tools, models, and workflows worth your attention for the week of Nov 30 - Dec 6, 2025.

Tooling and Model Updates

DeepSeek V3.2

DeepSeek released V3.2 and V3.2-Speciale, two 685B reasoning models that land in the same performance band as the top closed models, but with open weights and MIT licensing.

On SWE-bench Verified, DeepSeek V3.2 in thinking mode scores 73.1%, making it the best open-weights model for coding tasks, ahead of Kimi K2 Thinking at 71.3%. For comparison, Gemini 3 Pro sits at 76.2%, GPT-5.1 Codex Max at 77.9%, and the recently released Opus 4.5 leads at 80.9%.

Pricing comes in at $0.28 input / $0.42 output per million tokens. That's a fraction of Gemini 3 Pro ($2 / $12), GPT-5.1 ($1.25 / $10), and Sonnet 4.5 ($3 / $15).
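To see what that gap means in practice, here is a back-of-the-envelope cost comparison at the list prices quoted above. The workload (20M input tokens, 1M output tokens) is a made-up example chosen to be input-heavy, as agent loops tend to be because each turn resends the conversation context. The calculation ignores caching discounts and any tiered pricing, and `session_cost` is a hypothetical helper.

```python
# List prices quoted above, in USD per million tokens: (input, output).
# Assumption: flat pricing, no cache-hit discounts or context-length tiers.
PRICES_PER_MILLION = {
    "DeepSeek V3.2": (0.28, 0.42),
    "Gemini 3 Pro": (2.00, 12.00),
    "GPT-5.1": (1.25, 10.00),
    "Sonnet 4.5": (3.00, 15.00),
}


def session_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of a workload at the list prices above."""
    in_price, out_price = PRICES_PER_MILLION[model]
    return (input_tokens * in_price + output_tokens * out_price) / 1_000_000


if __name__ == "__main__":
    # Hypothetical agentic workload: 20M input tokens, 1M output tokens.
    for model in PRICES_PER_MILLION:
        cost = session_cost(model, 20_000_000, 1_000_000)
        print(f"{model:>14}: ${cost:,.2f}")
```

At these assumed volumes, that works out to about $6 for DeepSeek V3.2, versus $35 for GPT-5.1, $52 for Gemini 3 Pro, and $75 for Sonnet 4.5.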

Benchmark numbers and pricing make this model a serious option for agentic coding. Read the announcement and the tech report.

Quick Updates

Claude Opus 4.5 is now available in Claude Code for Pro users. Previously, it was only available on the Max 5x and Max 20x plans.

JetBrains launched JetBrains Air, an “agentic development environment” built around defining tasks and reviewing results rather than free-form chat.

Community Picks

Writing a Good CLAUDE.md

Two key takeaways. First, use progressive disclosure. Instead of putting every instruction about building your project, running tests, architecture, code conventions, and other important context into your CLAUDE.md file, keep task-specific instructions in separate markdown files like the following:

agent_docs/
|- building_the_project.md
|- running_tests.md
|- code_conventions.md
|- service_architecture.md
|- database_schema.md
|- service_communication_patterns.md

Then reference these from CLAUDE.md as needed. Second, don't use an LLM to do a linter's job. Rely on deterministic tools (formatters, linters, etc.) for style and consistency instead of the LLM. Read more.
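As an agent_docs/ folder grows, the pointers in CLAUDE.md can drift out of sync with the files on disk. Here is a minimal sketch of a consistency check, assuming CLAUDE.md sits at the repo root and references are written as plain relative paths such as agent_docs/running_tests.md. The function names `reference_problems` and `check_repo` are hypothetical, and the reference detection is a deliberately naive regex.

```python
import re
from pathlib import Path


def reference_problems(
    claude_md: str, doc_paths: set[str], docs_dir: str = "agent_docs"
) -> tuple[list[str], list[str]]:
    """Return (unreferenced, missing) given CLAUDE.md text and the docs on disk."""
    # Naive detection (assumption): any "agent_docs/<name>.md" token counts as a reference.
    pattern = re.escape(docs_dir) + r"/[\w.-]+\.md"
    mentioned = set(re.findall(pattern, claude_md))
    unreferenced = sorted(doc_paths - mentioned)  # docs the agent is never pointed to
    missing = sorted(mentioned - doc_paths)  # pointers to files that don't exist
    return unreferenced, missing


def check_repo(repo_root: Path, docs_dir: str = "agent_docs") -> tuple[list[str], list[str]]:
    """Read CLAUDE.md and list docs_dir/*.md from disk, then compare the two."""
    claude_md = (repo_root / "CLAUDE.md").read_text(encoding="utf-8")
    doc_paths = {f"{docs_dir}/{p.name}" for p in (repo_root / docs_dir).glob("*.md")}
    return reference_problems(claude_md, doc_paths, docs_dir)
```

Something like `check_repo(Path("."))` could run in CI or a pre-commit hook and fail when either list is non-empty, which keeps the consistency check itself deterministic, in the spirit of the second takeaway.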

How AI is Transforming Work at Anthropic

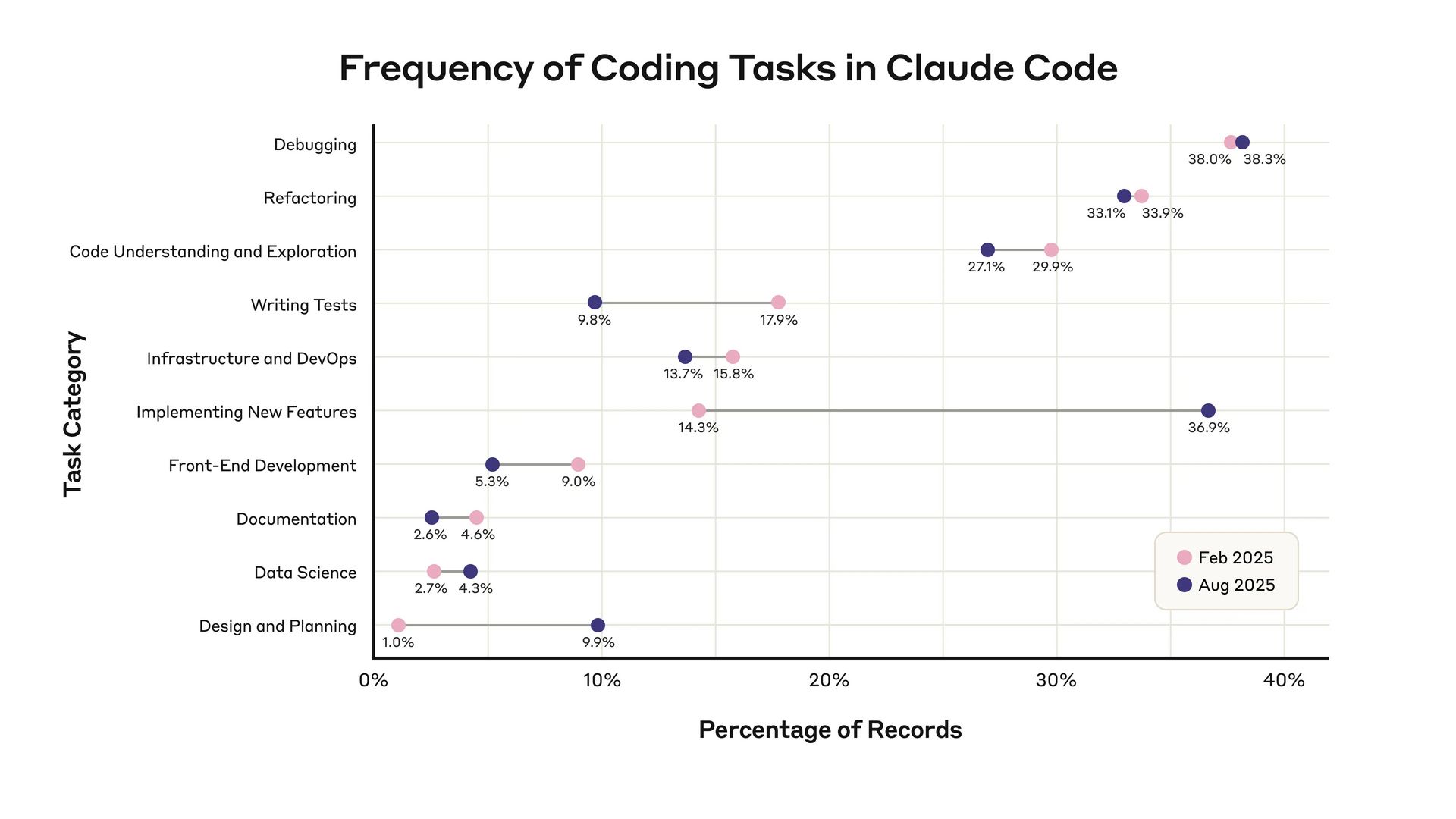

Anthropic published an internal study of 132 engineers and researchers on how AI, and Claude Code in particular, has changed their day-to-day work. Unsurprisingly, output is up, but the details of how they use Claude Code are more interesting.

Engineers are now giving Claude Code noticeably more complex work. Task complexity (on a 1–5 scale) rose from 3.2 to 3.8 on average, i.e., more multi-step, higher-stakes changes rather than trivial edits.

Debugging, implementing new features, refactoring, and writing tests were the most common tasks done with Claude Code; data science and documentation were the least common.

Usage also varies by team: the Security team uses it heavily for code understanding (analyzing security implications), while the Pre-training team uses it to scaffold new features for experiments.

Read the full study for details.

What I Learned Building an Opinionated and Minimal Coding Agent

The author details building 'pi', a minimal, opinionated coding agent harness, out of frustration with the complexity and lack of control in existing tools like Claude Code and Cursor.

This quote resonated with me because I like to keep my tools and workflows as simple as possible, so that I spend my cognitive energy on the task at hand rather than on the tool:

I preferred Claude Code for most of my work. It was the first thing I tried back in April after using Cursor for a year and a half. Back then, it was much more basic. That fit my workflow perfectly, because I'm a simple boy who likes simple, predictable tools. Over the past few months, Claude Code has turned into a spaceship with 80% of functionality I have no use for. The system prompt and tools also change on every release, which breaks my workflows and changes model behavior. I hate that.

The post details the architecture required to build your own: a unified LLM API handling multiple providers, an agent loop for tool execution/validation, a TUI for rendering text and updating terminal, and finally a CLI that wires everything together with sessions, custom tools, and project context. Read more.
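The sketch below is not pi's code. It is a minimal illustration, under assumptions, of how the first two layers can fit together: a provider-agnostic `Provider` interface, and an agent loop that validates each tool call before executing it and feeds results (or errors) back to the model. All names, the message-dict format, and the `ScriptedProvider` stand-in, which replays canned replies so the loop runs offline, are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Protocol


@dataclass
class ToolCall:
    name: str
    args: dict


@dataclass
class Reply:
    text: str
    tool_calls: list[ToolCall] = field(default_factory=list)


class Provider(Protocol):
    """Hypothetical unified interface: one adapter per LLM vendor implements it."""

    def complete(self, messages: list[dict]) -> Reply: ...


@dataclass
class Tool:
    run: Callable[..., str]
    required: set[str]  # argument names the tool must receive


def agent_loop(provider: Provider, tools: dict[str, Tool], task: str, max_turns: int = 10) -> str:
    """Call the model, execute requested tools, feed results back, repeat."""
    messages: list[dict] = [{"role": "user", "content": task}]
    for _ in range(max_turns):
        reply = provider.complete(messages)
        messages.append({"role": "assistant", "content": reply.text})
        if not reply.tool_calls:
            return reply.text  # no tool requests: treat the reply as the final answer
        for call in reply.tool_calls:
            tool = tools.get(call.name)
            # Validate before executing; errors go back to the model instead of crashing.
            if tool is None:
                result = f"error: unknown tool {call.name!r}"
            elif (missing := tool.required - set(call.args)):
                result = f"error: missing args {sorted(missing)}"
            else:
                try:
                    result = tool.run(**call.args)
                except Exception as exc:  # surface tool failures to the model
                    result = f"error: {exc}"
            messages.append({"role": "tool", "name": call.name, "content": result})
    raise RuntimeError(f"no final answer after {max_turns} turns")


class ScriptedProvider:
    """Offline stand-in for a real provider adapter: replays canned replies."""

    def __init__(self, replies: list[Reply]):
        self._replies = iter(replies)
        self.seen: list[list[dict]] = []  # snapshot of the messages passed on each call

    def complete(self, messages: list[dict]) -> Reply:
        self.seen.append(list(messages))
        return next(self._replies)
```

Real adapters, one per vendor API, would replace `ScriptedProvider` behind the same `complete` method; the TUI and CLI layers the post describes would sit on top of `agent_loop` and are left out here.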

AI-Assisted Coding Killed My Joy of Programming

The author argues that AI coding assistants act like a cheat code: initially exciting, but ultimately eroding the intrinsic joy of solving problems and debugging by hand.

HN comments tell a different story. Many argue it reignited their love of coding. Worth reading both perspectives. Read the post and the HN comments.

Building an AI-Native Engineering Team

A guide from OpenAI on how coding agents are transforming the software development lifecycle (SDLC). It argues that agents are moving beyond simple code generation to handling all phases of the SDLC: scoping, prototyping, testing, and operational triage.

The key takeaway is that while agents serve as the "first-pass implementer," engineers retain control over architecture and product intent. Read the full guide.

Ask HN: What has been your experience with Agentic Coding?

Short discussion on Hacker News. Notable observation from the OP: agentic coding shifts cognitive load from on-demand execution to pre-planned execution. The work becomes less about precisely implementing every piece of logic and more about defining the problem space clearly enough that the agent can assemble the solution reliably. Read HN comments.

That’s it for this week. I’ll be back next Monday with the latest agentic coding updates.